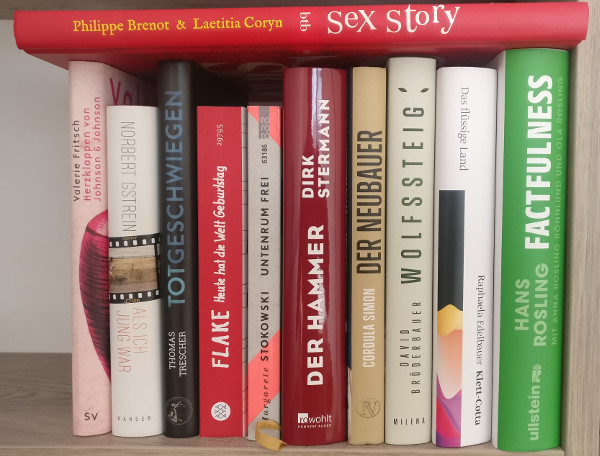

Bookdump 01/2020

February 25th, 2020

Books I have read so far in 2020:

- Factfulness, by Hans Rosling with Anna Rosling Rönnlund and Ola Rosling. Hans Rosling, who died in 2017, is known for his TED Talks, among other things, and worked on this book until shortly before his death. In this roughly 380-page book, Rosling and his team explain why they consider the division into “the (rich) West” and “developing countries” to be wrong. Instead, Rosling divides the world into four income levels (Levels 1 to 4) and demonstrates how arrogant and mistaken the views of people in the highest-income group (Level 4) are. At the same time, the people on Level 4 are responsible for most of the man-made CO2 emissions of the last 50 years. He points out the danger of isolated numbers and percentages and of comparing averages, majorities, and extremes, and argues that distorted and overdramatized news gives us a false picture of the world and that our view of a rosy past is usually clouded. Interpreting trends critically, weighing fear against reality, and the relationship between income and the number of children a woman gives birth to are also among the topics covered. The book tries to convey a positive view of the world. Rosling called himself a possibilist, though he has also been accused of Pollyannaism.

I read “Factfulness” at a time when the fires in Australia were destroying vast areas and producing dramatic images. For me, that gives Rosling's criticism of “climate refugees” an interesting spin (especially because Australia itself refused to accept refugees from the South Pacific in mid-2019). I dislike how the book plays down numbers on, for example, extreme poverty and infant mortality. Globally, the numbers really are lower than ever, but the several hundred million people living in extreme poverty still exist and have not yet vanished from the world. The cited survey results, in which the answers from different countries and groups of people are each compared against chimpanzees (which are supposed to stand in for random choice), strike me as condescending and as a conflation of chance with the assessment of a situation (hello, statistics). For me, Rosling's sacrosanct income levels are partly at odds with the gap between the poor and the (super-)rich within the highest-income group (Level 4).

The topics come up again and again, and towards the end the book became even more repetitive. Once again, less would have been more: a more compact, more densely written book would have left a better impression on me.

Each chapter ends with a summary, and there is a usable index and an extensive list of sources. The book's good intentions cannot be denied either, and it probably takes people like Rosling to reach the broad public. I had expected a book that was hard(er) to read, but it is written in a very accessible and bold style, so I can certainly recommend it as a starting point for questioning one's own worldview. Rosling would probably not contradict me when I write that the book encourages further research: with Dollar Street and the Gapminder tools, which are mentioned in the book and maintained by Rosling's team, you can explore the subject beyond the book itself.

- Das flüssige Land, by Raphaela Edelbauer. The parents of theoretical physicist Ruth Schwarz die in an accident. By a roundabout route, the protagonist ends up in an Austrian village called Groß-Einland, where her parents grew up but which officially does not exist. The academic city dweller tries to find out more about how her parents were rooted in the village community. She follows in her parents' footsteps and immerses herself in the history of the village as well as her own family history, while a hole threatens to swallow the village.

The Kafkaesque and linguistically powerful story has plenty of material for unexpected twists, deals with National Socialism and Austria's culture of not remembering, and alludes (at least in my interpretation) to the Lassing mining disaster. 350 eloquent pages.

- Wolfssteig, by David Bröderbauer. In this 263-page novel, Bröderbauer, a trained biologist and practicing botanist, writes about the fictional military training ground Wolfssteig in the Waldviertel, which is modeled on Allentsteig. The protagonists are Christian Moser and Ulrich Bruckner. Moser, injured during an exercise of the Austrian Armed Forces (Bundesheer) and left disabled, becomes the caretaker of a home for asylum seekers. Bruckner, a biologist returning from the big city, does research on black grouse and is supposed to count them at said training ground when it is shut down.

This book deals with various socially critical topics, from nature conservation and conflicts of interest (among farmers, hunters, conservationists, and locals, among others), through rural exodus and a lack of direction, to xenophobia and religion. Bröderbauer manages this without setting himself up as a moralizer. In the first part there are several instances of “als” vs. “wie” that irritated me, and in places I find the wording less successful. However, I find the linguistic excursions into nature and the portraits of society particularly appealing, and they invite reflection. Bröderbauer succeeds in addressing many complex topics with only a few protagonists and without an overly constructed story around them.

- Der Neubauer, by Cordula Simon. “Bad people always do well.” The nameless first-person narrator of this book, who somehow muddles and cheats his way through life, lives by this motto, which recurs throughout the book. This survival artist has a special gift: alcohol lets him read the minds of the people around him.

The 200 pages contain brute-force rhetoric in fast-paced language, lovely coined words, and a critical look at the present day that takes on hipsters, millennials, posers, and the differences between social classes. There is plenty of cynicism with a dash of wit, and a sweeping blow in which everyone gets their share (vegetarians/vegans, fat people, the wealthy, women's rights, …). The book does not put you in a good mood, but it is fun to read.

- Der Hammer, by Dirk Stermann. This 444-page historical novel is about the life of Joseph Freiherr von Hammer-Purgstall. Joseph Hammer lived from 1774 to 1856, was the eldest of eight children, and was a linguistically gifted friend and devotee of Oriental studies. Overly self-confident, he fails, mainly because of his undiplomatic manner, to reach his goal of making the Orient his professional field. Along the way you learn all sorts of things about the politics of the 18th and 19th centuries, such as the Congress of Vienna and the Napoleonic Wars, but also about everyday life at the time, which was marked by excrement and dirt. The Jesuit Hammer meets statesmen such as Napoleon and Metternich, as well as artists such as Beethoven and Haydn. He records words of praise about himself in his “Lobbuch” (an invention of Stermann's, but one I find especially charming).

A book based on real events and brimming with detail, with a connection to Graz (Grätz), that contains many facts about Austrian history. Because of the density of facts, my reading pace was slower than usual, and it took me around 40 pages to get used to the writing style. Interestingly, for quite a while I also kept noticing the guillemets set in the French or Swiss style. But I enjoyed reading it very much and found it a very well researched book (according to Stermann, he spent 1.5 years on research and 2 years on writing it), wrapped in an interesting style. Absolutely worth reading.

- Untenrum frei, by Margarete Stokowski. From the blurb: “How free and equal are we? […] Stokowski shows how role stereotypes and feelings of shame manifest themselves, how they restrict us, and that we can get rid of them.”

Stokowski talks very openly about her own experiences and everyday observations. She manages to package historical context very skillfully and accessibly into a total of 7 chapters. I very much enjoyed reading the 252 pages. A clear recommendation.

- Heute hat die Welt Geburtstag, by Flake. Christian “Flake” Lorenz is the keyboardist of Rammstein. In this book, which is modeled on a picaresque novel, he tells of his time in the punk band Feeling B and later in Rammstein. The 352 pages are a quick read, written in an entertaining and slightly scatterbrained style. It is about the GDR, punk, and plenty of fire, alcohol, food, and petty theft. Flake comes across as naive but also self-deprecating, and as crazy as his (band) life appears to be, he seems to be a likeable scatterbrain.

- Sex Story – eine Kulturgeschichte in Bildern, by Philippe Brenot and Laetitia Coryn. On loan from a neighbor (thanks, C.!), this is a non-fiction book in comic form that covers sexuality in 12 chapters and around 200 pages, from the origins and Babylon through the Middle Ages to the 20th century, with an outlook on the “sex of the future”. It is beautifully and wittily done, and there are all sorts of interesting facts about cultural history. The only thing that struck me negatively was the flippant statement that “circumcision [note: male circumcision] continues to be practiced without great harm to men”. Oof.

- Tot geschwiegen – Warum es der Staat Mördern so leicht macht, by Thomas Treschner. The book's blurb says “Forensic pathologists estimate that every second murder goes undetected” and “a true-crime book with many case studies about the failure of a system that prefers to adorn itself with low murder rates rather than look for the skeletons in the closet”.

The criticism of the system comes across clearly, but the 214 pages are written in a chatty, long-winded style with unnecessary details, which for me unfortunately makes the book a bad mix of non-fiction and novel. The facts are interesting, as is some of the history of forensic medicine, but there are no source references whatsoever. Treschner criticizes Austrian forensic medicine, and Vienna's in particular, but I would have preferred this as an article in the Falter rather than slogging through these 200-plus pages.

- Als ich jung war, by Norbert Gstrein. The book has two parallel storylines told from the perspective of the first-person narrator Franz. One is set in a hotel restaurant in Tyrol, where Franz works as a wedding photographer. The other is set in Wyoming, USA, where Franz works as a ski instructor for a professor. There is Franz's mother, who keeps announcing her suicide, a professor who takes his own life, and a bride who presumably took her own life on her wedding day. Franz is one of the suspects, since he was one of the last people to see the bride alive. And Franz kissed a (very) young girl against her will when he himself was in his early 20s.

The 349-page book is about pedophilia, hypocrisy, the lies people build their lives on, family histories, and “shoving women”. It is written in clear and precise language, is beautiful as literature, and is at times as gripping as a crime novel. A clear recommendation.

- Darm mit Charme: Alles über ein unterschätztes Organ, by Giulia Enders. I had the 23rd edition, as of 2014, with around 280 pages (a loan, and since I have already returned it, it is also missing from the photo above. Thanks, Silvia!). There were a few facts and bits of news about our digestive organ for me, but overall there was too much waffle and too much repetition, and I found it tedious to read. Once again, I would have preferred a dense, fact-rich newspaper article. I cannot shake the impression that the book came about as a result of Giulia Enders's 2012 science slam talk and was subsequently hyped. As entertaining as the talk may be, the book unfortunately bored me just as much.

- Herzklappen von Johnson & Johnson, by Valerie Fritsch. Alma and Friedrich Gruber have a son named Emil, who cannot feel pain. This premise is embedded in a story involving the grandparents and revolving around the theme of war. Emil and the grandparents seem like antipodes, opposing figures in the family history. It raises an interesting question: how do you teach a person who feels no physical pain what hurts?

The 175 pages are linguistically dense and intense, and I was thoroughly enthralled. A clear recommendation.